The World Wide Web is a treasure trove of valuable data waiting to be tapped into. But how can you efficiently extract the information you need? Enter the realm of web scraping techniques! In the ever-evolving landscape of data mining, it’s crucial to stay ahead of the curve and master a range of methods to ensure success. Get ready to embark on a journey through the most effective web scraping techniques, best practices, and cutting-edge approaches for handling even the most complex websites. Your data extraction endeavors will never be the same!

Short Summary

- Master web scraping techniques to extract data from static and dynamic websites, leveraging libraries/APIs, OCR technology, headless browsers & DOM parsing.

- Follow ethical best practices when web scraping by respecting website owners’ stance on scraping & avoiding sensitive data.

- Utilize advanced programming techniques for successful extraction of data while minimizing captcha tests with adjusted request delays & rotating IPs.

Mastering Web Scraping Techniques

Web scraping, also known as data scraping, is a technique for extracting data from web pages, and it has proven invaluable for eCommerce businesses, marketers, consultancies, academic researchers, and more. By a commonly cited estimate, the world generates around 2.5 quintillion bytes of data every day, and web scraping techniques offer access to this vast pool of information for commercial research and evaluation. By using the right tools to scrape data, you can unlock the full potential of this information for further analysis.

But how do you choose the right technique for your project? There are three main categories of web scraping techniques: manual web scraping, automated web scraping tools, and web scraping libraries and APIs. Each has its advantages and drawbacks, and the choice depends on your specific needs, resources, and the complexity of the website you’re scraping.

Let’s delve deeper into each of these three categories.

Manual Web Scraping

Manual web scraping is the most basic form of data extraction, involving retrieving the entire webpage and then extracting the desired data by manually inspecting its HTML code for specific elements. While it’s time-consuming, manual scraping techniques can circumvent anti-bot defenses and may be appropriate for small-scale or one-time web scraping projects.

However, manual web scraping has its limitations. The laborious process of copying and pasting web content into a database can be prone to human error, and it may not be suitable for extracting data from multiple pages or complex websites. For these reasons, automated web scraping techniques are often preferred for larger projects.

Automated Web Scraping Tools

Automated web scraping tools, also known as web scrapers, streamline the data extraction process and are capable of handling large-scale projects. Web scraping software, such as ParseHub and Octoparse, extracts data from both static and dynamic webpages through HTTP requests, collecting more records in less time than manual methods.

While automated web scraping tools offer convenience and efficiency, they may face challenges when dealing with dynamic websites that rely on JavaScript or AJAX for content rendering. In such cases, advanced web scraping techniques like headless browsers and DOM parsing can be employed to successfully extract the desired data.

Web Scraping Libraries and APIs

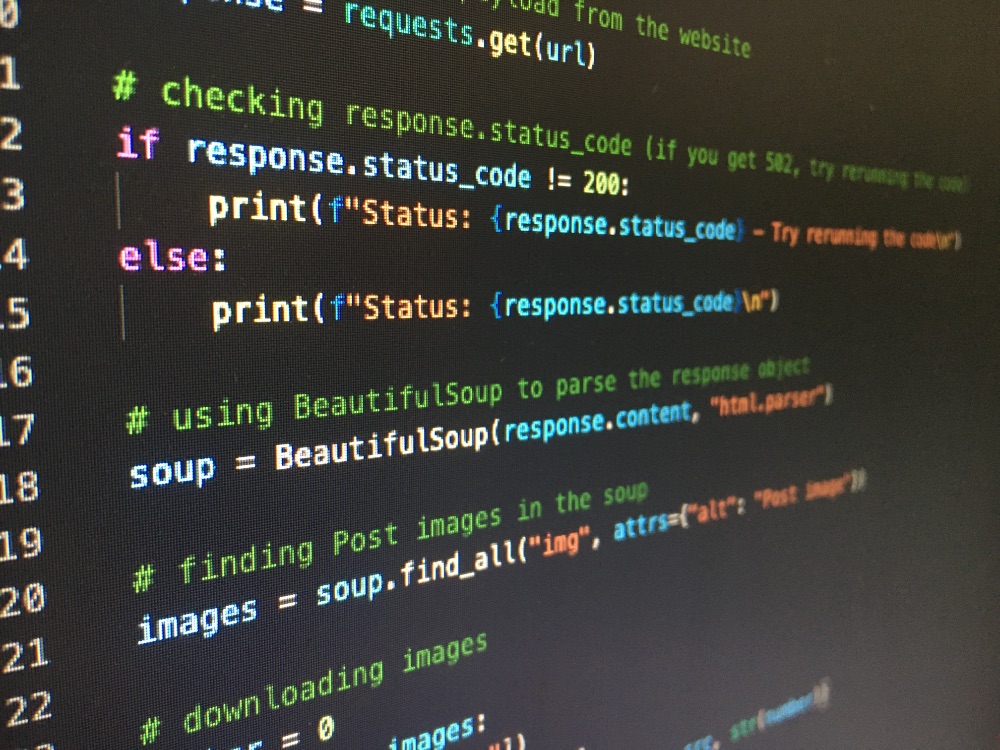

Web scraping libraries, such as Scrapy, Beautiful Soup, Selenium, and Puppeteer, provide pre-built functions and tools for web scraping tasks. On the other hand, web scraping APIs, like Smartproxy’s web scraping API, enable developers to access and extract pertinent data from websites with API calls, often including proxy features to avoid being blocked.

Utilizing web scraping libraries and APIs allows for more control over the scraping process and can be integrated into custom applications developed in popular programming languages like Python, JavaScript, and Ruby. This flexibility makes web scraping libraries and APIs ideal for those who require a more tailored approach to data extraction.
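To make the library approach concrete, here is a minimal sketch that uses only Python's built-in `html.parser` module. The sample markup, the `product` and `price` class names, and the `ProductParser` and `extract_products` helpers are all assumptions made for illustration. Libraries such as Beautiful Soup or Scrapy offer far more convenient interfaces for the same job.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this first.
SAMPLE_HTML = """
<html><body>
  <h2 class="product">Blue Widget</h2><span class="price">$19.99</span>
  <h2 class="product">Red Widget</h2><span class="price">$24.50</span>
</body></html>
"""


class ProductParser(HTMLParser):
    """Collects the text of <h2 class="product"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self._field = None  # which field the parser is currently inside, if any
        self.names = []
        self.prices = []

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "h2" and css_class == "product":
            self._field = "name"
        elif tag == "span" and css_class == "price":
            self._field = "price"

    def handle_endtag(self, tag):
        # Any closing tag ends the field we were collecting.
        self._field = None

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self._field == "name":
            self.names.append(text)
        elif self._field == "price":
            self.prices.append(float(text.lstrip("$")))


def extract_products(html):
    """Return a list of (name, price) tuples found in the markup."""
    parser = ProductParser()
    parser.feed(html)
    return list(zip(parser.names, parser.prices))


products = extract_products(SAMPLE_HTML)
print(products)  # [('Blue Widget', 19.99), ('Red Widget', 24.5)]
```

The same pattern carries over to a third-party library: fetch the page, locate elements by tag and class, then convert the text into typed values.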

Advanced Web Scraping Techniques

As websites become more complex and sophisticated, so too must the web scraping techniques used to extract their valuable data. Advanced web scraping techniques encompass a variety of methods, including avoiding honeypot traps, solving captchas, using proxies, analyzing request rates, header inspection, pattern detection, running Splash scripts, and writing clean spiders.

These advanced techniques are essential for tackling challenging websites that implement anti-scraping measures or render content dynamically using JavaScript and AJAX, making traditional web scraping methods inadequate. Let’s explore some of these advanced techniques in detail.

Optical Character Recognition (OCR)

Optical Character Recognition (OCR) technology enables computers to recognize and interpret text from images or scanned documents, making it possible to extract text data from web pages containing images, scanned documents, and PDFs. OCR has its limitations, such as accuracy, speed, and cost, but it can be a valuable tool in your web scraping arsenal.

To get the best results when using OCR for web scraping, choose OCR software suited to the job, apply settings that match the source material, and verify the extracted text against the original. By incorporating OCR into your web scraping toolkit, you can successfully extract data from a wider range of sources.

Headless Browsers and DOM Parsing

Headless browsers run without a graphical user interface and, when driven by automation tools like Puppeteer and Selenium, can access and extract data from dynamic and interactive websites that employ client-side or server-side scripting. They can interact with page elements and extract data that is not present in the initial HTML source.

Document Object Model (DOM) parsing enables the conversion of HTML or XML documents to their associated DOM representation, allowing for the extraction of specific segments or the entire page.

By combining headless browsers and DOM parsing, you can extract data from complex websites that would otherwise be challenging with traditional web scraping techniques.
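As an illustration of DOM parsing on its own, the sketch below converts a small markup fragment into a DOM tree with Python's standard `xml.dom.minidom` module and pulls out specific segments. The fragment and the `extract_links` helper are hypothetical. Note that `minidom` only accepts well-formed XML, so real-world HTML usually needs a more lenient parser, or the DOM exposed by a headless browser.

```python
from xml.dom import minidom

# Hypothetical, well-formed XHTML fragment; minidom requires valid XML.
SAMPLE_XHTML = """<div id="listing">
  <ul>
    <li class="item"><a href="/p/1">First article</a></li>
    <li class="item"><a href="/p/2">Second article</a></li>
  </ul>
</div>"""


def extract_links(markup):
    """Parse markup into a DOM tree and return (text, href) for every <a> element."""
    dom = minidom.parseString(markup)
    links = []
    for anchor in dom.getElementsByTagName("a"):
        # Join the text nodes that are direct children of the anchor.
        text = "".join(
            node.data
            for node in anchor.childNodes
            if node.nodeType == node.TEXT_NODE
        )
        links.append((text.strip(), anchor.getAttribute("href")))
    return links


links = extract_links(SAMPLE_XHTML)
print(links)  # [('First article', '/p/1'), ('Second article', '/p/2')]
```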

Handling AJAX and JavaScript Rendering

AJAX and JavaScript-rendered websites present unique challenges for web scraping, as their content is dynamically generated and may not be visible in the HTML source code. Furthermore, content may be loaded asynchronously, posing additional difficulties for scraping.

To effectively scrape AJAX and JavaScript-rendered websites, techniques such as headless browsers, DOM parsing, and web scraping libraries and APIs can be employed. By understanding the website structure and using the appropriate web scraping tool, you can overcome these challenges and extract the desired data from even the most complex and interactive websites.
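Part of understanding the website structure is checking whether the data already ships with the page. Some JavaScript-driven pages embed their initial data as JSON inside a `<script>` tag, in which case it can be read without rendering anything. This is not a replacement for a headless browser, only a cheaper option worth trying first. The sketch below assumes such a page; the markup, the `initial-data` id, and the `extract_embedded_json` helper are hypothetical.

```python
import json
from html.parser import HTMLParser

# Hypothetical page: the visible list is empty until JavaScript runs,
# but the data ships inside a JSON <script> tag.
SAMPLE_PAGE = """
<html><body>
  <ul id="results"></ul>
  <script id="initial-data" type="application/json">
    {"items": [{"title": "Alpha", "votes": 12}, {"title": "Beta", "votes": 7}]}
  </script>
</body></html>
"""


class JsonScriptParser(HTMLParser):
    """Captures the body of the first <script type="application/json"> element."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.payload = None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/json":
            # Only capture the first matching script element.
            self._inside = self.payload is None

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.payload = (self.payload or "") + data


def extract_embedded_json(html):
    """Return the decoded JSON payload, or None if the page has none."""
    parser = JsonScriptParser()
    parser.feed(html)
    return json.loads(parser.payload) if parser.payload else None


data = extract_embedded_json(SAMPLE_PAGE)
titles = [item["title"] for item in data["items"]]
print(titles)  # ['Alpha', 'Beta']
```

When no such payload exists, or the content only appears after user interaction, rendering the page in a headless browser remains the more reliable route.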

Hybrid Web Scraping Approaches

Hybrid web scraping approaches combine the power of automated and manual techniques to ensure accuracy and completeness of scraped data. These approaches are particularly useful when dealing with complex websites that require both automated and manual methods to extract data effectively.

While hybrid web scraping can offer improved accuracy and the capability to process complex websites, it may require increased resources and time to complete, as well as the possibility of errors due to manual input. Nevertheless, a hybrid approach can be an invaluable asset when tackling intricate web scraping projects where neither automated nor manual techniques alone will suffice.

Best Practices for Web Scraping

When embarking on your web scraping journey, it’s essential to adhere to best practices and ethical considerations, such as ensuring the data gathered is used solely for its intended purpose and abstaining from any unethical practices. Respect website owners’ boundaries by examining their robots.txt file to determine their stance on web scraping and avoiding copyright infringement.
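Checking a robots.txt file can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical robots.txt held in a string so that it runs without network access; the `my-scraper` user agent, the example.com URLs, and the `build_rules` helper are assumptions. In practice you would point the parser at the site's real file with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the site's /robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""


def build_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser ready for queries."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


rules = build_rules(SAMPLE_ROBOTS)

# Ask whether our (hypothetical) user agent may fetch each URL.
allowed = rules.can_fetch("my-scraper", "https://example.com/products/1")
blocked = rules.can_fetch("my-scraper", "https://example.com/private/report")

# Crawl-delay is optional; crawl_delay() returns None when it is absent.
delay = rules.crawl_delay("my-scraper")

print(allowed, blocked, delay)  # True False 10
```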

Scraping social media platforms comes with the responsibility of avoiding any sensitive information that could violate users’ privacy. Personal details that could enable identity theft are one example of such sensitive data that should be avoided when scraping. Schedule scraping requests at an appropriate interval, such as 10 seconds or more, and consider extracting data during off-peak hours to minimize the impact on website performance.
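One way to enforce a minimum interval between requests is a small throttle object, sketched below. The `Throttle` class and its parameter names are hypothetical, and the 10-second default simply mirrors the interval suggested above. The clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforces a minimum gap between consecutive requests."""

    def __init__(self, min_interval=10.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Sleep just long enough to honor min_interval; return seconds waited."""
        now = self._clock()
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        # Record when this request is released.
        self._last = self._clock()
        return waited
```

A scraper would create one `Throttle` per target site and call `wait()` immediately before each request, so slow responses count toward the interval instead of adding to it.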

Overcoming Web Scraping Challenges

Web scraping challenges, such as dealing with captchas, IP tracking, and logins, can hinder successful data extraction. To overcome these obstacles, employ open-source tools, advanced programming techniques, image recognition using machine learning or deep learning, and third-party services like DeathByCaptcha and AntiCaptcha to address captcha issues.

Minimize the frequency of captcha tests by adjusting the request delay time and utilizing rotating IPs. When logging in during web scraping, simulate keyboard and mouse operations to replicate the login process and gain access to information that is only available after logging in.
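The delay and IP rotation advice can be sketched as a simple request plan: each URL is paired with the next proxy in a round-robin rotation and a randomized pause. The proxy addresses (taken from a range reserved for documentation), the default timings, and the helper names below are all assumptions. Sending the requests through an HTTP client is left out so the sketch stays self-contained.

```python
import itertools
import random

# Hypothetical proxy addresses (documentation-range IPs); substitute your provider's.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Return an iterator that yields proxies in round-robin order, forever."""
    return itertools.cycle(proxies)


def jittered_delay(base=5.0, jitter=2.0, rng=random):
    """Return a delay in [base, base + jitter] so requests are not evenly spaced."""
    return base + rng.uniform(0.0, jitter)


def plan_requests(urls, proxies, base=5.0, jitter=2.0, rng=random):
    """Pair each URL with the next proxy and a randomized pause before sending it."""
    rotation = proxy_rotation(proxies)
    return [(url, next(rotation), jittered_delay(base, jitter, rng)) for url in urls]


urls = [f"https://example.com/page/{i}" for i in range(4)]
for url, proxy, pause in plan_requests(urls, PROXIES):
    print(f"wait {pause:.1f}s, then fetch {url} via {proxy}")
```

Randomizing the pause matters because perfectly regular request timing is itself a pattern that anti-bot systems can detect.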

Data Analysis Techniques After Successful Scraping

Once you’ve successfully scraped your web data, it’s time to analyze it using various data analysis techniques, such as descriptive, diagnostic, predictive, and prescriptive analysis. Descriptive analysis summarizes what happened, for example by evaluating a company’s key performance indicators or generating revenue reports, while diagnostic analysis investigates why it happened by correlating outcomes with relevant behaviors and patterns.

Predictive analysis utilizes data to predict potential outcomes, such as risk assessment and sales forecasting, while prescriptive analysis combines insights from other methods to identify the optimal course of action to address a problem or make a decision in a business setting.
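Of the four, descriptive analysis is the simplest to start with. As a minimal sketch, the snippet below summarizes a handful of scraped prices with Python's standard `statistics` module. The records and the `describe` helper are made up for illustration; larger datasets are usually handled with a dedicated data analysis library.

```python
import statistics

# Hypothetical scraped records: (product, price in USD).
SCRAPED_PRICES = [
    ("Widget A", 20.00),
    ("Widget B", 24.50),
    ("Widget C", 22.00),
    ("Widget D", 31.50),
]


def describe(prices):
    """Return basic descriptive statistics for a list of (name, price) records."""
    values = [price for _, price in prices]
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
    }


summary = describe(SCRAPED_PRICES)
print(summary)
```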

Choosing the Right Web Scraping Tool

Selecting the right web scraping tool is crucial, as it enables you to quickly and effectively extract data from websites. When choosing a web scraping tool, consider the trustworthiness of the provider, cost, user-friendliness, scalability, and precision.

Tools like ParseHub and Octoparse are popular choices, with Octoparse offering a straightforward and free solution for web scraping. Ultimately, the choice depends on your specific needs, project requirements, and available resources. By carefully evaluating your options, you can ensure the success of your web scraping endeavors.

Summary

Web scraping is an invaluable tool for extracting a wealth of data from the vast expanses of the World Wide Web. By mastering a range of techniques, from manual to automated and advanced, you can overcome the challenges posed by complex websites and ensure the accuracy and completeness of your extracted data. With a solid understanding of best practices, ethical considerations, and data analysis techniques, you’ll be well-equipped to harness the power of web data for your business or research endeavors. So, are you ready to unlock the true potential of web scraping and tap into the wealth of information that awaits?

Frequently Asked Questions

What are the types of data scraping?

Data scraping is a technique for extracting data from websites, legacy systems, and generated reports. There are three main types of data scraping: report mining, screen scraping, and web scraping.

With these methods, users can obtain the data they need quickly and easily.

What is an example of data scraping?

An example of data scraping is when a web scraper is used to collect certain information from a website. This information can then be stored in a database for further analysis.

For instance, an online retailer may use web scrapers to extract product data from competitors’ websites for pricing comparison.

Is product scraping legal?

In general, product scraping is legal as long as it does not involve breaching any website’s terms of use or accessing data protected by copyright laws. However, companies should exercise caution when engaging in web scraping activities, as some countries have specific regulations on data privacy and misuse of data.