Python is one of the most popular programming languages for web scraping in 2025, and for good reason. Its simple syntax, extensive library ecosystem, and powerful data manipulation capabilities make it ideal for extracting information from websites. Whether you're collecting data for research, monitoring prices, or building datasets for machine learning, Python provides the tools you need.

In this comprehensive guide, we'll explore Python web scraping fundamentals and dive deep into the most popular libraries: Requests, Beautiful Soup, and lxml. You'll learn practical techniques through real-world examples and best practices for building robust scrapers.

What Is Web Scraping?

Web scraping is the automated process of extracting data from websites by sending HTTP requests and parsing the returned HTML content. Instead of manually copying and pasting information, web scrapers can collect thousands of data points in minutes.
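To make the "parse the returned HTML" step concrete, here is a minimal sketch that uses only the standard library. The `page` string is a made-up stand-in for a response body, and `LinkCollector` is a hypothetical helper name; the rest of this guide uses Requests and Beautiful Soup for the same job.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag seen while parsing."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# Stand-in for the body of an HTTP response (hypothetical page)
page = """
<html><body>
  <a href="/products/1">Laptop</a>
  <a href="/products/2">Phone</a>
  <a>No link here</a>
</body></html>
"""

collector = LinkCollector()
collector.feed(page)
print(collector.links)  # ['/products/1', '/products/2']
```

A real scraper would obtain `page` from an HTTP response rather than a literal string.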

Common web scraping applications include:

- Price monitoring: Track product prices across e-commerce sites

- Market research: Gather competitor data and industry trends

- Lead generation: Extract contact information from business directories

- Content aggregation: Collect news articles or social media posts

- Real estate data: Monitor property listings and market trends

- Job market analysis: Track job postings and salary information

Python excels at web scraping because of its rich ecosystem of libraries that handle HTTP requests, HTML parsing, and data processing. Let's explore the essential tools you'll need.

Prerequisites and Setup

Before we start, ensure you have Python 3.7 or higher installed. You can download it from the official Python website. We'll use Python 3 throughout this guide, as Python 2 reached end of life on January 1, 2020.

Create a virtual environment for your scraping projects to manage dependencies cleanly:

    python -m venv webscraping_env
    source webscraping_env/bin/activate  # On Windows: webscraping_env\Scripts\activate

The Requests Library: Your HTTP Foundation

The Requests library is the cornerstone of any Python web scraping project. It simplifies making HTTP requests and handling responses, providing a much cleaner interface than Python's built-in urllib module.
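For comparison, this is roughly what preparing a request looks like with the built-in urllib module. It is a sketch only: the search endpoint and its parameters are invented for illustration, and the network call is left commented out so the snippet runs offline.

```python
import urllib.request
from urllib.parse import urlencode

# Hypothetical search endpoint and parameters, for illustration only
base_url = "https://example.com/search"
params = {"q": "web scraping", "page": 2}

# urllib leaves query-string encoding to the caller
url = f"{base_url}?{urlencode(params)}"
request = urllib.request.Request(url, headers={"User-Agent": "MyBot 1.0"})

print(request.full_url)      # https://example.com/search?q=web+scraping&page=2
print(request.get_method())  # GET

# Sending it would look like this (commented out to avoid network access):
# with urllib.request.urlopen(request, timeout=10) as response:
#     body = response.read().decode("utf-8")
```

With Requests, the equivalent is a single call such as `requests.get(base_url, params=params, headers={"User-Agent": "MyBot 1.0"}, timeout=10)`, which encodes the query string and decodes the body for you.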

Installation and Basic Usage

Install Requests using pip:

    pip install requests

Here's a basic example of fetching a webpage:

    import requests

    # Make a GET request
    response = requests.get('https://httpbin.org/json')

    # Check if request was successful
    if response.status_code == 200:
        print("Success!")
        print(response.text)
    else:
        print(f"Failed with status code: {response.status_code}")

Handling Headers and Sessions

Real web scraping often requires setting custom headers to mimic browser behavior:

    import requests

    # Set headers to mimic a real browser
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    }

    response = requests.get('https://example.com', headers=headers)

For websites that require login or maintain state, use sessions:

    import requests

    session = requests.Session()
    session.headers.update({'User-Agent': 'MyBot 1.0'})

    # Login
    login_data = {'username': 'user', 'password': 'pass'}
    session.post('https://example.com/login', data=login_data)

    # Now make authenticated requests
    response = session.get('https://example.com/protected-page')

Error Handling and Timeouts

Always implement proper error handling and timeouts:

    import requests
    from requests.exceptions import RequestException, Timeout

    try:
        response = requests.get('https://example.com', timeout=10)
        response.raise_for_status()  # Raises an HTTPError for bad responses
        print(response.text)
    except Timeout:
        print("Request timed out")
    except RequestException as e:
        print(f"Request failed: {e}")

Beautiful Soup: Parsing HTML Made Easy

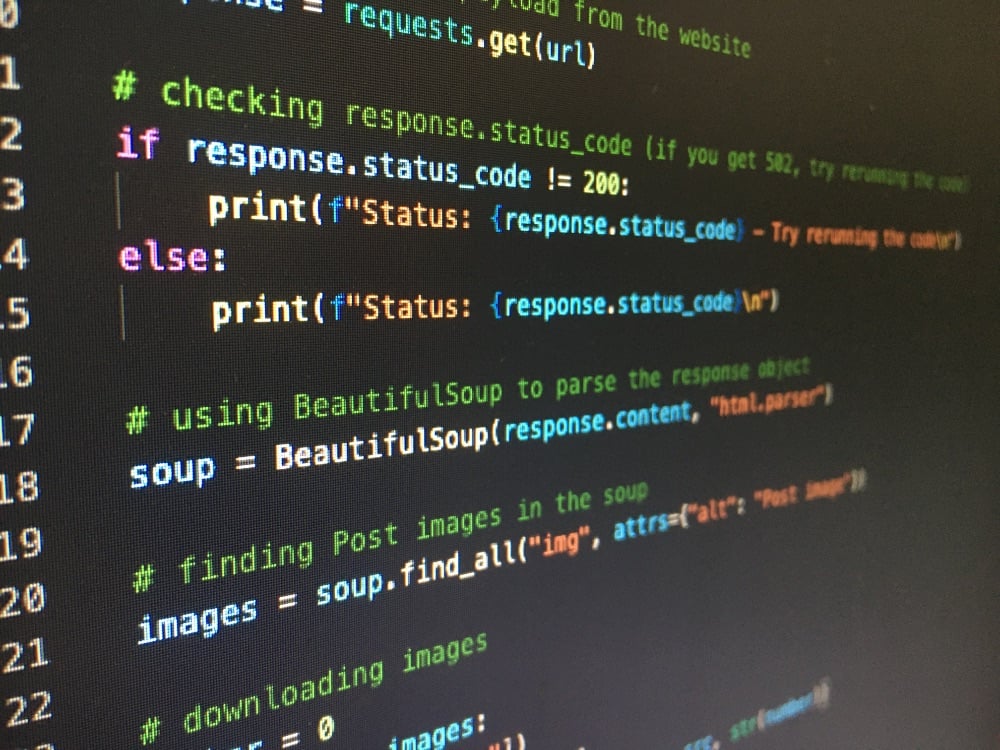

Beautiful Soup transforms complex HTML into a navigable Python object tree. It's perfect for extracting specific elements from web pages. For more detailed questions and answers about Beautiful Soup, check out our Beautiful Soup FAQ section.
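As a small illustration of what "navigable" means, the sketch below moves between parent and sibling nodes of an inline HTML fragment. The markup is made up for the example, and it assumes the `beautifulsoup4` package is already installed (installation is covered in the next section).

```python
from bs4 import BeautifulSoup

# Made-up fragment used only to demonstrate navigation
html = """
<ul id="menu">
  <li class="item">Home</li>
  <li class="item current">Books</li>
  <li class="item">Contact</li>
</ul>
"""

soup = BeautifulSoup(html, "html.parser")

# Start from one element, then walk to its relatives
current = soup.find("li", class_="current")
print(current.get_text())                              # Books
print(current.parent["id"])                            # menu
print(current.find_next_sibling("li").get_text())      # Contact
print(current.find_previous_sibling("li").get_text())  # Home
```

Navigating from a known element like this is often more robust than writing one long selector from the document root.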

Installation and Basic Parsing

Install Beautiful Soup 4:

    pip install beautifulsoup4

Basic HTML parsing example:

    import requests
    from bs4 import BeautifulSoup

    # Fetch and parse a webpage
    response = requests.get('https://quotes.toscrape.com/')
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract the page title
    print(soup.title.text)

    # Find all quotes
    quotes = soup.find_all('div', class_='quote')

    for quote in quotes:
        text = quote.find('span', class_='text').text
        author = quote.find('small', class_='author').text
        print(f'"{text}" - {author}')

Advanced Selection Techniques

Beautiful Soup offers multiple ways to find elements:

    from bs4 import BeautifulSoup

    html = """
    <div class="product">
        <h2 id="product-title">Laptop</h2>
        <span class="price" data-currency="USD">$999</span>
        <div class="specs">
            <ul>
                <li>16GB RAM</li>
                <li>512GB SSD</li>
            </ul>
        </div>
    </div>
    """

    soup = BeautifulSoup(html, 'html.parser')

    # Find by tag
    title = soup.find('h2').text

    # Find by class
    price = soup.find('span', class_='price').text

    # Find by attribute
    price_element = soup.find('span', {'data-currency': 'USD'})

    # Find by CSS selector
    specs = soup.select('.specs li')
    spec_list = [spec.text for spec in specs]

    # Find by ID
    product_title = soup.find(id='product-title').text

    print(f"Product: {title}")
    print(f"Price: {price}")
    print(f"Specs: {spec_list}")

Practical Example: Scraping Book Information

Let's build a scraper for a book catalog:

    import requests
    from bs4 import BeautifulSoup
    import csv

    def scrape_books():
        base_url = 'http://books.toscrape.com/catalogue/page-{}.html'
        books = []

        for page in range(1, 6):  # Scrape first 5 pages
            url = base_url.format(page)
            response = requests.get(url, timeout=10)
            response.raise_for_status()  # Fail on HTTP errors instead of parsing an error page

            # Pass the raw bytes so Beautiful Soup can detect the page encoding itself
            soup = BeautifulSoup(response.content, 'html.parser')

            # Find all book containers
            book_containers = soup.find_all('article', class_='product_pod')

            for book in book_containers:
                # Extract book details
                title = book.find('h3').find('a')['title']
                price = book.find('p', class_='price_color').text
                # The second class name holds the rating word, e.g. "Three"
                rating = book.find('p', class_='star-rating')['class'][1]
                availability = book.find('p', class_='instock availability').text.strip()

                books.append({
                    'title': title,
                    'price': price,
                    'rating': rating,
                    'availability': availability
                })

        return books

    # Save to CSV
    books = scrape_books()

    with open('books.csv', 'w', newline='', encoding='utf-8') as file:
        writer = csv.DictWriter(file, fieldnames=['title', 'price', 'rating', 'availability'])
        writer.writeheader()
        writer.writerows(books)

    print(f"Scraped {len(books)} books and saved to books.csv")

lxml: High-Performance XML and HTML Processing

lxml is a powerful library for processing XML and HTML documents. It's faster than Beautiful Soup and offers XPath support, making it ideal for complex data extraction tasks. Explore our lxml FAQ section for advanced techniques and troubleshooting.
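To give a sense of the kind of query XPath makes easy, the sketch below filters elements by a numeric condition and then reads fields relative to each match. The catalog markup is invented for the example, and it assumes the `lxml` package is already installed (installation is covered in the next section).

```python
from lxml import html

# Invented catalog fragment used only for illustration
page = """
<div class="catalog">
  <div class="product"><span class="name">Laptop</span><span class="price">999</span></div>
  <div class="product"><span class="name">Mouse</span><span class="price">25</span></div>
  <div class="product"><span class="name">Monitor</span><span class="price">310</span></div>
</div>
"""
tree = html.fromstring(page)

# Predicate: keep only products whose price, read as a number, exceeds 100
expensive = tree.xpath(
    '//div[@class="product"][span[@class="price"] > 100]/span[@class="name"]/text()'
)
print(expensive)  # ['Laptop', 'Monitor']

# Relative queries (starting with ./) keep each product's fields together
rows = []
for product in tree.xpath('//div[@class="product"]'):
    name = product.xpath('./span[@class="name"]/text()')[0]
    price = product.xpath('./span[@class="price"]/text()')[0]
    rows.append((name, price))
print(rows)
```

Querying relative to each product element, rather than collecting names and prices in two separate lists, avoids misaligned pairs when a field is missing from one item.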

Installation and Basic Usage

Install lxml:

    pip install lxml

Basic HTML parsing with lxml:

    import requests
    from lxml import html

    response = requests.get('https://quotes.toscrape.com/')
    tree = html.fromstring(response.content)

    # Extract quotes using XPath
    quotes = tree.xpath('//div[@class="quote"]//span[@class="text"]/text()')
    authors = tree.xpath('//div[@class="quote"]//small[@class="author"]/text()')

    for quote, author in zip(quotes, authors):
        print(f'"{quote}" - {author}')

XPath Expressions

XPath provides powerful selection capabilities:

    from lxml import html

    # Sample HTML
    html_content = """
    <div class="container">
        <div class="product" data-id="123">
            <h2>Smartphone</h2>
            <span class="price">$599</span>
            <div class="features">
                <span class="feature">5G</span>
                <span class="feature">128GB</span>
            </div>
        </div>
    </div>
    """

    tree = html.fromstring(html_content)

    # XPath examples
    product_name = tree.xpath('//h2/text()')[0]
    price = tree.xpath('//span[@class="price"]/text()')[0]
    features = tree.xpath('//span[@class="feature"]/text()')
    product_id = tree.xpath('//div[@class="product"]/@data-id')[0]

    print(f"Product: {product_name}")
    print(f"Price: {price}")
    print(f"Features: {features}")
    print(f"ID: {product_id}")

Choosing the Right Parser

Different parsers have different strengths:

| Parser | Speed | Lenient | External Dependency |
| --- | --- | --- | --- |
| html.parser | Fast | Yes | No |
| lxml | Very Fast | Yes | Yes |
| html5lib | Slow | Very Lenient | Yes |
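Because two of these parsers are optional installs, a scraper can choose one at runtime. The helper below is a hypothetical sketch (`pick_parser` is not part of Beautiful Soup) that returns the first preferred parser that is importable and otherwise falls back to the built-in one.

```python
import importlib.util


def pick_parser(preferred=("lxml", "html5lib")):
    """Return the first installed parser name, falling back to html.parser."""
    for name in preferred:
        # find_spec returns None when the top-level package is not installed
        if importlib.util.find_spec(name) is not None:
            return name
    return "html.parser"


parser_name = pick_parser()
print(parser_name)
```

The returned string can be passed as the second argument to `BeautifulSoup`, for example `BeautifulSoup(markup, pick_parser())`. Keep in mind that different parsers can build different trees from malformed HTML, so results may vary between machines if the choice is left to whatever happens to be installed.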

To see the difference, parse the same malformed fragment with each parser (lxml and html5lib must be installed separately):

    from bs4 import BeautifulSoup

    html = "<div><p>Unclosed paragraph<div>Nested incorrectly</div>"

    # Different parsers handle malformed HTML differently
    # Requires: pip install lxml html5lib
    soup1 = BeautifulSoup(html, 'html.parser')
    soup2 = BeautifulSoup(html, 'lxml')
    soup3 = BeautifulSoup(html, 'html5lib')

    print("html.parser:", soup1)
    print("lxml:", soup2)
    print("html5lib:", soup3)

Best Practices and Common Pitfalls

Respect robots.txt

Always check a website's robots.txt file before scraping:

    import urllib.robotparser
    from urllib.parse import urljoin

    def can_scrape(url, user_agent='*'):
        rp = urllib.robotparser.RobotFileParser()
        # robots.txt lives at the site root, whatever path the URL has
        rp.set_url(urljoin(url, '/robots.txt'))
        rp.read()
        return rp.can_fetch(user_agent, url)

    # Check before scraping
    if can_scrape('https://example.com'):
        print("Scraping allowed")
    else:
        print("Scraping not allowed")

Handle Rate Limiting

Implement delays and exponential backoff:

    import time
    import random

    import requests
    from requests.adapters import HTTPAdapter
    from urllib3.util.retry import Retry

    def create_session_with_retries():
        session = requests.Session()

        # Define retry strategy: up to 3 retries with growing waits,
        # triggered by rate-limit and server-error status codes
        retry_strategy = Retry(
            total=3,
            backoff_factor=1,
            status_forcelist=[429, 500, 502, 503, 504],
        )

        adapter = HTTPAdapter(max_retries=retry_strategy)
        session.mount("http://", adapter)
        session.mount("https://", adapter)

        return session

    # Use the session
    session = create_session_with_retries()
    urls = ['https://example.com/page1', 'https://example.com/page2']

    for url in urls:
        response = session.get(url, timeout=10)
        # Process response
        time.sleep(random.uniform(1, 3))  # Random delay

Data Validation and Cleaning

Always validate and clean scraped data:

    import re

    def clean_price(price_text):
        """Extract numeric price from text"""
        if not price_text:
            return None

        # Remove currency symbols, thousands separators, and whitespace
        cleaned = re.sub(r'[^\d.]', '', price_text)

        try:
            return float(cleaned)
        except ValueError:
            return None

    def clean_text(text):
        """Clean and normalize text"""
        if not text:
            return ""

        # Remove extra whitespace and normalize
        return ' '.join(text.split())

    # Example usage
    raw_price = "$1,299.99"
    clean_price_value = clean_price(raw_price)  # 1299.99

    raw_description = " This is a product description "
    clean_description = clean_text(raw_description)  # "This is a product description"

Advanced Techniques

Handling JavaScript-Rendered Content

Some sites load content dynamically with JavaScript. For these cases, consider using Selenium:

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    from bs4 import BeautifulSoup

    # Setup headless Chrome
    options = webdriver.ChromeOptions()
    options.add_argument('--headless')
    driver = webdriver.Chrome(options=options)

    try:
        driver.get('https://example-spa.com')

        # Wait for dynamic content to load
        wait = WebDriverWait(driver, 10)
        wait.until(EC.presence_of_element_located((By.CLASS_NAME, 'dynamic-content')))

        # Get page source and parse with Beautiful Soup
        soup = BeautifulSoup(driver.page_source, 'html.parser')

        # Extract data as usual
        data = soup.find_all('div', class_='item')
    finally:
        driver.quit()

Concurrent Scraping

Use asyncio and aiohttp for faster scraping:

    import asyncio
    import aiohttp
    from bs4 import BeautifulSoup

    async def fetch_url(session, url):
        async with session.get(url) as response:
            return await response.text()

    async def scrape_multiple_urls(urls):
        async with aiohttp.ClientSession() as session:
            tasks = [fetch_url(session, url) for url in urls]
            responses = await asyncio.gather(*tasks)

        results = []
        for response in responses:
            soup = BeautifulSoup(response, 'html.parser')
            # Extract data from each page
            title = soup.find('title')
            results.append(title.text if title else 'No title')

        return results

    # Usage
    urls = ['https://example.com/page1', 'https://example.com/page2']
    results = asyncio.run(scrape_multiple_urls(urls))

Conclusion

Python provides an excellent foundation for web scraping with its rich ecosystem of libraries. Here's a quick recap:

- Requests: Use for making HTTP requests and handling sessions

- Beautiful Soup: Perfect for simple to moderate HTML parsing tasks

- lxml: Choose for high-performance parsing and complex XPath queries

- html.parser: Good for basic parsing without external dependencies

Remember to always:

- Respect websites' terms of service and robots.txt

- Implement proper error handling and timeouts

- Add delays between requests to avoid overwhelming servers

- Validate and clean your scraped data

- Consider the legal and ethical implications of your scraping activities
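Several of these habits can live in one small loop. The sketch below is one possible shape, not a definitive implementation: `polite_crawl` and `fake_fetch` are hypothetical names, and the fetch function is passed in so the example runs without network access.

```python
import time


def polite_crawl(urls, fetch, delay=1.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing between requests and recording failures.

    `fetch` is any callable that takes a URL and returns the page text,
    raising an exception on failure (a hypothetical interface).
    """
    pages, errors = {}, {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay)  # wait before every request after the first
        try:
            pages[url] = fetch(url)
        except Exception as exc:  # one bad page should not stop the run
            errors[url] = str(exc)
    return pages, errors


# Stand-in fetcher so the sketch runs offline
def fake_fetch(url):
    if url.endswith("/broken"):
        raise RuntimeError("HTTP 500")
    return f"<title>{url}</title>"


pages, errors = polite_crawl(
    ["https://example.com/a", "https://example.com/broken", "https://example.com/b"],
    fetch=fake_fetch,
    delay=0.1,
)
print(sorted(pages))  # ['https://example.com/a', 'https://example.com/b']
print(errors)         # {'https://example.com/broken': 'HTTP 500'}
```

In a real scraper, `fetch` could be a short function that calls `requests.get(url, timeout=10)`, then `raise_for_status()`, and returns `response.text`.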

With these tools and techniques, you're well-equipped to build robust web scrapers for any data collection project. Start with simple examples and gradually work your way up to more complex scenarios as you gain experience.

Related FAQ Resources

For more specific questions and detailed tutorials, explore these FAQ sections:

- Beautiful Soup FAQ - HTML parsing, element selection, and data extraction techniques

- Requests FAQ - HTTP requests, sessions, authentication, and error handling

- lxml FAQ - XPath queries, XML parsing, and high-performance processing

- Scrapy FAQ - Advanced web scraping framework for large-scale projects

- Selenium FAQ - Browser automation for JavaScript-heavy websites

- Python FAQ - General Python web scraping techniques and best practices