Picture this: you are on a mission to extract valuable data from the vast ocean of the internet, and the task looks daunting. Worry not: Rust, a fast and memory-safe programming language with strong concurrency support, comes to your rescue. Ready to explore Rust libraries for web scraping? Let’s get started!

Key Takeaways

- Rust libraries such as Reqwest, Scraper, and Headless_Chrome enable efficient data extraction.

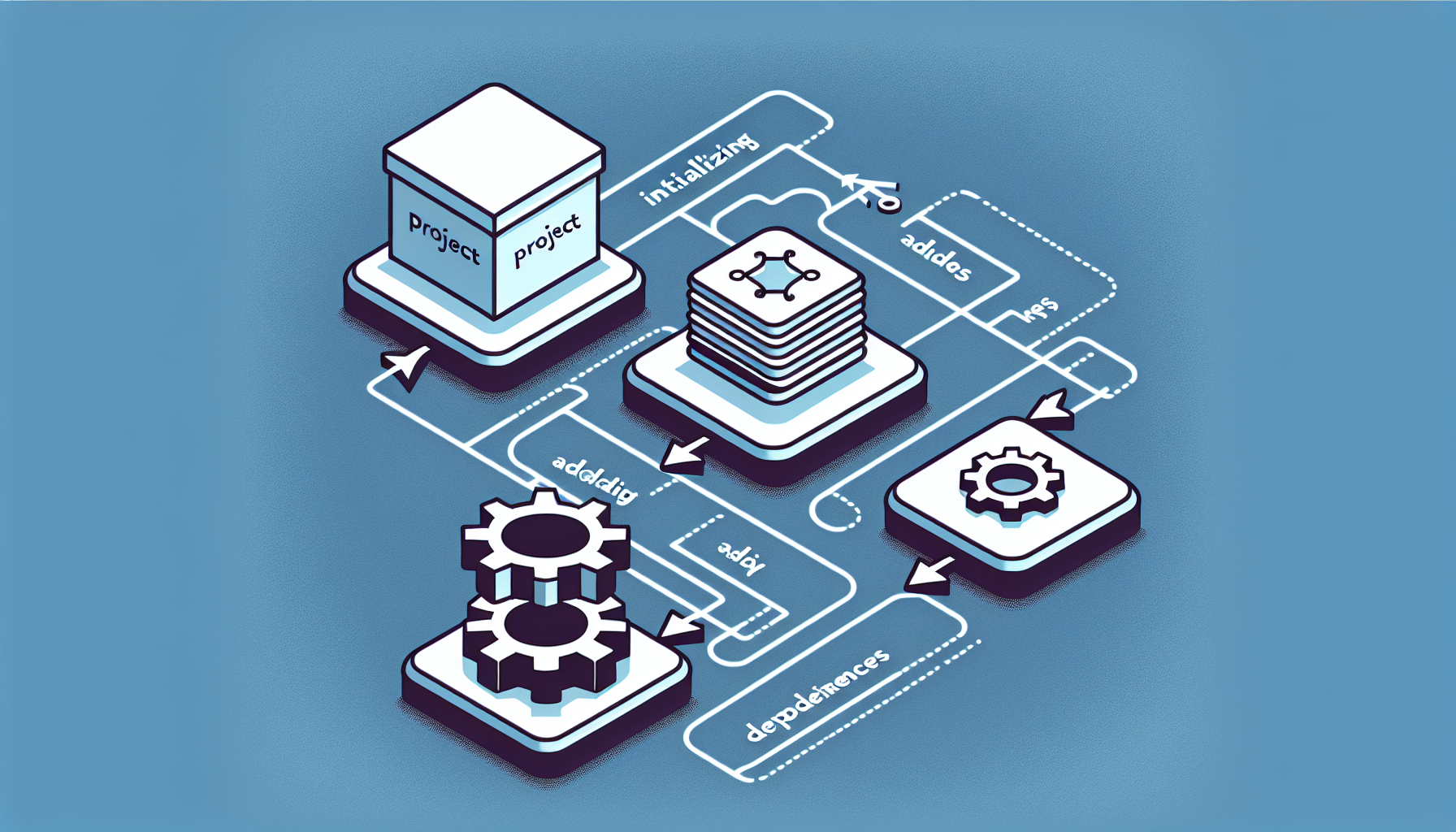

- Setting up a Rust web scraping project involves initializing the project with Cargo, adding dependencies to the Cargo.toml file, and configuring Visual Studio Code for development.

- Advanced strategies help manage challenges like CAPTCHAs and IP blocking, while adapting to website changes keeps the scraper working.

Rust Libraries for Web Scraping

In the Rust ecosystem, three musketeers stand out for web scraping: Reqwest, Scraper, and Headless_Chrome. These tools are like paintbrushes to an artist, allowing you to fetch pages, parse HTML, and navigate to the elements you need.

We’ll examine their unique capabilities in more depth.

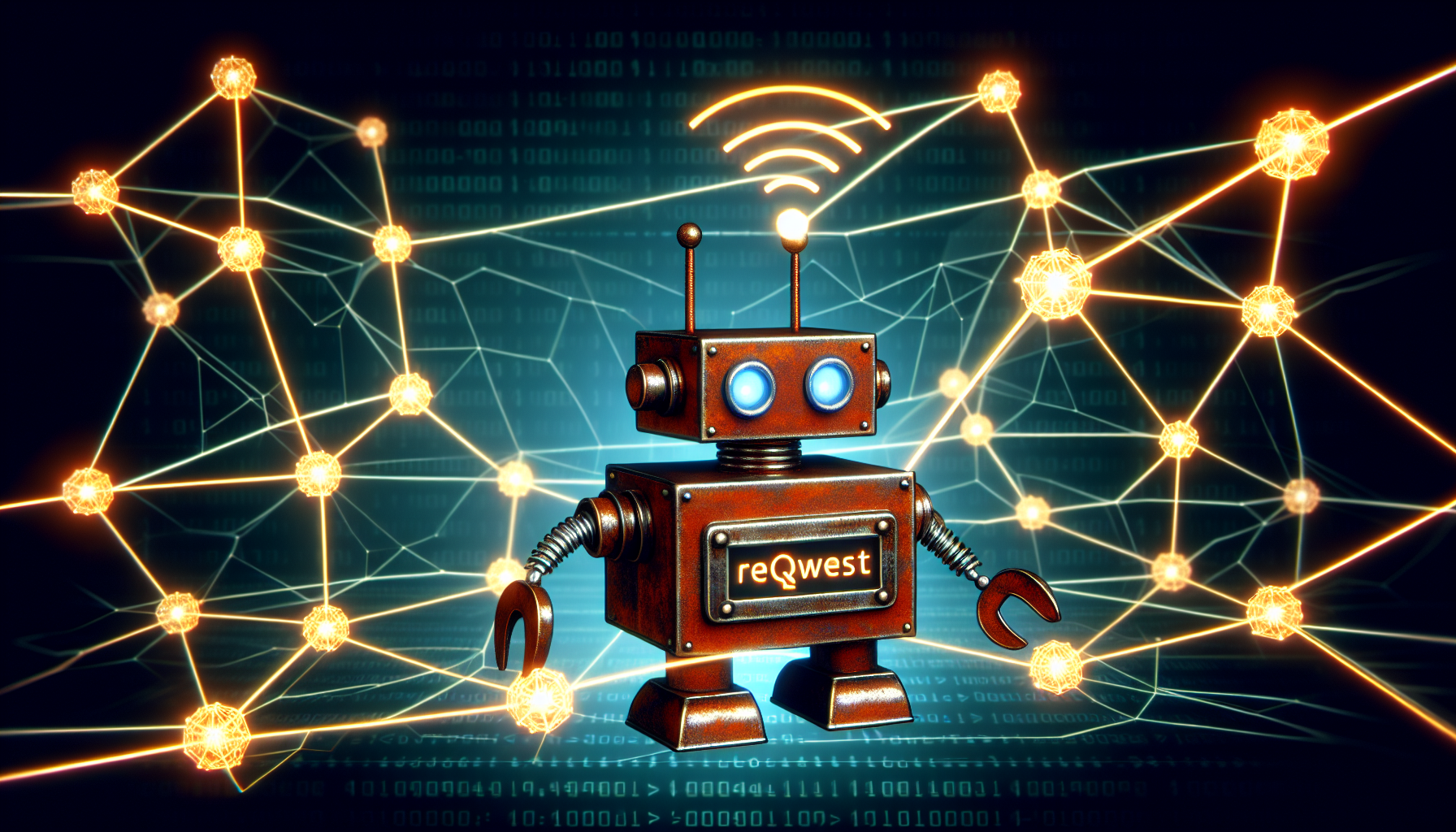

Reqwest

Imagine a courier service delivering packages from the world wide web to your Rust project. That’s Reqwest for you. A robust HTTP client library with an asynchronous API by default and an optional blocking one, Reqwest sends HTTP requests and delivers the content of web pages right to your doorstep.

So, be it fetching a document, posting a form, or even following redirects and handling cookies, Reqwest is your reliable assistant.

Scraper

Once we receive our package, we need a tool to open it. Meet Scraper, a Rust library for parsing HTML documents. Like a skilled miner extracting precious gems, Scraper uses CSS selectors to pull specific data out of a page. But remember, Scraper is not a courier: it doesn’t send requests, it only parses the content delivered by Reqwest.

Headless_Chrome

Sometimes, we encounter packages that are a bit more challenging to open. They may have JavaScript-based seals that ordinary tools like Scraper can’t handle. This is where Headless_Chrome steps in. It’s a Rust library that controls Chrome browsers in headless mode, allowing us to interact with JavaScript-heavy sites and extract data.

Think of it as a master locksmith, capable of opening even the most complex locks.

Setting Up Your Rust Web Scraping Project

Armed with our tools, it’s time to set up our workshop - the Rust web scraping project. We’ll use Cargo, Rust’s package manager, to initialize our project and manage our dependencies. We’ll also configure our workspace in Visual Studio Code for Rust development.

Ready to roll up your sleeves and dive in?

Initializing the Project with Cargo

Initiating a new Rust project with Cargo is quite straightforward. It’s like setting up a new canvas for our masterpiece. With a simple command, we get a ready-to-go Rust project structure, complete with a Cargo.toml file to declare our dependencies, and a src directory for our code.
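As a rough sketch, assuming a hypothetical project name of `rust_scraper`, the command and the layout it generates look like this:

```text
$ cargo new rust_scraper

rust_scraper/
├── Cargo.toml    # package metadata and dependencies
└── src/
    └── main.rs   # entry point with a "Hello, world!" stub
```

By default Cargo also initializes a Git repository with a .gitignore, and `cargo run` inside the new directory builds and runs the stub.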

Adding Dependencies to the TOML File

Next, we’ll add our tools to our toolbox. In the Cargo.toml file, we declare our dependencies - Reqwest and Scraper. It resembles gathering all necessary materials for our artwork, making sure we’re well-equipped before we commence our creation.
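A minimal sketch of the dependency section might look like the following. The version numbers are assumptions, so check crates.io for the current releases, and the `blocking` feature is only needed if you want Reqwest's synchronous client.

```toml
[dependencies]
# Version numbers are assumptions; check crates.io for current releases.
# The "blocking" feature enables reqwest's synchronous client.
reqwest = { version = "0.12", features = ["blocking"] }
scraper = "0.20"
```

Alternatively, running `cargo add reqwest --features blocking` and `cargo add scraper` writes equivalent entries with the latest versions for you.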

Configuring Visual Studio Code for Rust Development

Before we start painting, we need a comfortable and efficient workspace. By configuring Visual Studio Code for Rust development, we ensure that our workspace is set up for success. With recommended extensions and settings, we’ll have everything at our fingertips, ready to create our web scraping masterpiece.
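One possible starting point, assuming the rust-analyzer extension is installed, is a `.vscode/settings.json` file in the project root. The choices here (format on save, Clippy as the check command) are suggestions rather than requirements.

```json
{
  "[rust]": {
    "editor.defaultFormatter": "rust-lang.rust-analyzer",
    "editor.formatOnSave": true
  },
  "rust-analyzer.check.command": "clippy"
}
```

With this in place, Rust files are formatted whenever you save, and lints from Clippy show up inline as you work.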

Building a Basic Web Scraper with Rust

With our workspace set up, we’re finally ready to start painting. We’ll build a basic web scraper using our tools - Reqwest for sending HTTP requests and fetching web page content, and Scraper for parsing the HTML documents and extracting the desired elements.

Let’s commence!

Sending HTTP Requests with Reqwest

Our initial steps involve dispatching HTTP requests to acquire web page content. Reqwest, our reliable courier, is perfect for this job. With its ability to send both GET and POST requests, we can easily fetch the HTML code we need to parse.

Parsing HTML Documents with Scraper

With the HTML code in hand, it’s time to extract the valuable data we need from the HTML document. That’s where Scraper comes in. Like a jeweler inspecting a precious gem, Scraper parses the HTML document, allowing us to extract specific data from web pages using CSS selectors.

Storing and Exporting Scraped Data

Once the data is extracted, it needs a proper home. We’ll store our scraped data in custom data structures and then export it to formats like CSV.

It’s like storing our valuable gems in a safe and then showcasing them in a beautiful jewelry box.
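To make this concrete, here is a sketch that stores records in a custom struct and writes them out as CSV using only the standard library. The `Product` struct, its fields, and the `products.csv` file name are assumptions for illustration. Many projects use the `csv` crate instead of hand-rolling the quoting shown here.

```rust
use std::fs::File;
use std::io::{self, BufWriter, Write};

/// One scraped record; the fields are hypothetical.
#[derive(Debug, Clone, PartialEq)]
struct Product {
    name: String,
    price: String,
    url: String,
}

/// Quote a field when it contains a comma, quote, or line break,
/// doubling any embedded quotes (the usual CSV convention).
fn csv_field(value: &str) -> String {
    let needs_quotes = value.contains(|c: char| matches!(c, ',' | '"' | '\n' | '\r'));
    if needs_quotes {
        format!("\"{}\"", value.replace('"', "\"\""))
    } else {
        value.to_string()
    }
}

/// Write a header row followed by one row per product.
fn write_csv<W: Write>(mut out: W, products: &[Product]) -> io::Result<()> {
    writeln!(out, "name,price,url")?;
    for p in products {
        writeln!(
            out,
            "{},{},{}",
            csv_field(&p.name),
            csv_field(&p.price),
            csv_field(&p.url)
        )?;
    }
    Ok(())
}

fn main() -> io::Result<()> {
    let products = vec![Product {
        name: "Widget, large".to_string(),
        price: "9.99".to_string(),
        url: "https://example.com/widget".to_string(),
    }];
    let mut writer = BufWriter::new(File::create("products.csv")?);
    write_csv(&mut writer, &products)?;
    writer.flush()
}
```

Because `write_csv` accepts any `Write` implementation, the same function can target a file, standard output, or an in-memory buffer.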

Handling Dynamic Content and JavaScript in Rust Web Scraping

Web scraping can often present challenges. Sometimes, we encounter dynamic content and JavaScript, which can be a bit tricky to handle. But don’t fret! With the right tools and strategies, we can overcome these challenges.

Let’s examine the methods.

Using Headless Browsers

JavaScript-based sites may have complex locks that our usual tools can’t open. That’s when we call in our master locksmith, Headless_Chrome. By controlling Chrome browsers in headless mode, Headless_Chrome can interact with JavaScript-heavy sites and extract the data we need.

Alternative Techniques for Dynamic Content

Sometimes, even a master locksmith might need some help. For such situations, there are other ways to handle dynamic content: calling the JSON API that the page itself loads its data from, or requesting a server-side rendered version of the page when the site offers one.

Having a few more tools in our toolbox is always beneficial, right?

Web Crawling with Rust

After extracting data from a single page, we may want to venture further. That’s where web crawling comes in. With Rust, we can create web crawlers to collect and process data from multiple pages or websites.

Let’s see how.

Implementing a Simple Crawler

Building a simple web crawler in Rust is like assembling a robot that can explore the world on its own. It gathers links, navigates through websites, and collects the data we need. Let’s get our robot up and running!
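The core of such a robot is a queue of pages to visit plus a set of pages already seen. The sketch below shows that loop as a breadth-first crawl. To keep it runnable without network access, the "download and extract links" step is passed in as a closure, and `demo_links` is a hypothetical in-memory site standing in for real fetching and parsing.

```rust
use std::collections::{HashSet, VecDeque};

/// Breadth-first crawl from `seed`, visiting at most `max_pages` pages.
/// `fetch_links` stands in for "download the page and extract its links".
fn crawl<F>(seed: &str, max_pages: usize, mut fetch_links: F) -> Vec<String>
where
    F: FnMut(&str) -> Vec<String>,
{
    let mut queue = VecDeque::from([seed.to_string()]);
    let mut seen: HashSet<String> = HashSet::from([seed.to_string()]);
    let mut visited = Vec::new();

    while let Some(url) = queue.pop_front() {
        if visited.len() >= max_pages {
            break;
        }
        for link in fetch_links(&url) {
            // `insert` returns false for URLs already queued or visited.
            if seen.insert(link.clone()) {
                queue.push_back(link);
            }
        }
        visited.push(url);
    }
    visited
}

/// A tiny hypothetical site: each path maps to the links found on it.
fn demo_links(url: &str) -> Vec<String> {
    let links: &[&str] = match url {
        "/" => &["/a", "/b"],
        "/a" => &["/b", "/c"],
        "/b" => &["/"],
        _ => &[],
    };
    links.iter().map(|l| l.to_string()).collect()
}

fn main() {
    let order = crawl("/", 10, demo_links);
    println!("visited: {order:?}");
}
```

In a real crawler, the closure would fetch the page with Reqwest, extract `href` values with Scraper, and resolve relative links against the page URL. The `max_pages` limit keeps the robot from wandering indefinitely.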

Advanced Crawling Strategies

As our robot ventures further, it might encounter challenges like pagination and duplicate requests. But with advanced crawling strategies, we can equip our robot to handle these challenges effortlessly.

Ready to upgrade your robot?
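Two small helpers illustrate these strategies: one generates the URLs of a paginated listing, and the other removes duplicate URLs after normalizing them. This is a simplified sketch. The `?page=N` query parameter is an assumption about how a site paginates, and the normalization only strips fragments and trailing slashes, whereas a production crawler would more likely parse URLs with a dedicated crate.

```rust
use std::collections::HashSet;

/// Treat trivially different spellings of a URL as the same page by
/// dropping the `#fragment` and any trailing slash.
fn normalize(url: &str) -> String {
    let without_fragment = url.split('#').next().unwrap_or(url);
    without_fragment.trim_end_matches('/').to_string()
}

/// Build the URLs for pages 1..=last_page of a listing.
/// The `?page=N` scheme is hypothetical; check how your target paginates.
fn page_urls(base: &str, last_page: u32) -> Vec<String> {
    (1..=last_page).map(|n| format!("{base}?page={n}")).collect()
}

/// Remove duplicates after normalization, keeping first-seen order.
fn dedup(urls: &[&str]) -> Vec<String> {
    let mut seen = HashSet::new();
    urls.iter()
        .map(|u| normalize(u))
        .filter(|u| seen.insert(u.clone()))
        .collect()
}

fn main() {
    for url in page_urls("https://example.com/items", 3) {
        println!("listing page: {url}");
    }
    let unique = dedup(&[
        "https://example.com/a/",
        "https://example.com/a#top",
        "https://example.com/b",
    ]);
    println!("unique: {unique:?}");
}
```

Checking every discovered link against the normalized set before queueing it keeps the crawler from requesting the same page twice.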

Overcoming Web Scraping Challenges in Rust

Web scraping doesn’t always proceed without obstacles. Challenges such as CAPTCHAs and IP blocking can disrupt our operations. But fear not! With the right strategies and tools, we can overcome these challenges and keep our web scraping project on track.

Dealing with CAPTCHAs and IP Blocking

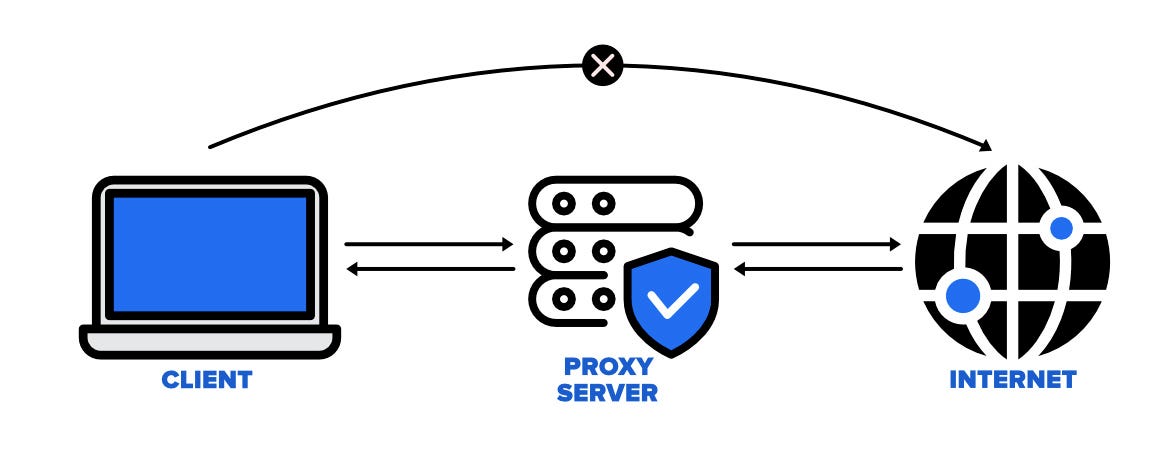

CAPTCHAs and IP blocking are like the guards protecting the treasure we seek. But with strategies like using rotating proxies and CAPTCHA-solving libraries, we can outsmart these guards and gain access to the treasure - our valuable data.
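Proxy rotation itself is simple bookkeeping. The sketch below hands out proxy URLs in round-robin order so that consecutive requests leave through different addresses. The `ProxyPool` type and the proxy addresses are hypothetical, and wiring the chosen proxy into an HTTP client is left out.

```rust
/// A round-robin pool of proxy URLs.
struct ProxyPool {
    proxies: Vec<String>,
    next: usize,
}

impl ProxyPool {
    /// Returns `None` when the list is empty, since there is nothing to rotate.
    fn new(proxies: Vec<String>) -> Option<Self> {
        if proxies.is_empty() {
            None
        } else {
            Some(Self { proxies, next: 0 })
        }
    }

    /// Hand out the next proxy, wrapping around at the end of the list.
    fn next_proxy(&mut self) -> &str {
        let index = self.next;
        self.next = (index + 1) % self.proxies.len();
        &self.proxies[index]
    }
}

fn main() {
    // Placeholder addresses; use the proxies from your own provider.
    let mut pool = ProxyPool::new(vec![
        "http://proxy-a.example:8080".to_string(),
        "http://proxy-b.example:8080".to_string(),
    ])
    .expect("at least one proxy");

    for request in 1..=4 {
        println!("request {request} via {}", pool.next_proxy());
    }
}
```

A fuller version might also drop proxies that keep failing, but the rotation logic stays the same.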

Adapting to Website Changes

Similar to the ever-changing ocean tides, websites too undergo alterations. Updating CSS selectors, handling redirects, and constantly monitoring the website structure can help us adapt to these changes and continue our web scraping journey without a hitch.

Summary

As our web scraping journey comes to a close, let’s look back at the path we’ve traveled. We’ve learned how to use Rust for web scraping, how to handle dynamic content and JavaScript, how to build web crawlers, and how to overcome common web scraping challenges. With these essential tools and strategies, you’re now equipped to navigate the vast sea of the internet and extract the valuable data you need.

Frequently Asked Questions

Which library to use for web scraping?

For web scraping in Rust, Reqwest (HTTP requests), Scraper (HTML parsing), and Headless_Chrome (JavaScript-heavy pages) cover most needs. In Python, the commonly used counterparts are Requests, BeautifulSoup, Scrapy, and Selenium.

Can you get banned for web scraping?

Yes, you can get banned for web scraping if you make too many requests in a short amount of time. To avoid detection, consider implementing a delay between requests and mimicking human browsing behavior.
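As an illustration of pacing, the sketch below pauses between requests and computes an exponential backoff delay for retries. The delay values, URLs, and the `backoff_delay` helper are assumptions, and the actual HTTP call is replaced by a `println!`.

```rust
use std::thread;
use std::time::Duration;

/// Exponential backoff: `base * 2^attempt`, capped at `max`.
/// Saturating arithmetic avoids overflow for large attempt counts.
fn backoff_delay(attempt: u32, base: Duration, max: Duration) -> Duration {
    let factor = 2u32.saturating_pow(attempt);
    base.saturating_mul(factor).min(max)
}

fn main() {
    // Placeholder URLs standing in for the pages to fetch.
    let urls = ["https://example.com/page/1", "https://example.com/page/2"];
    let pause = Duration::from_millis(500);

    for (index, url) in urls.iter().enumerate() {
        // Wait between requests, but not before the first one.
        if index > 0 {
            thread::sleep(pause);
        }
        println!("fetching {url}");
    }

    // Delays to use when a request fails and is retried.
    for attempt in 0..4 {
        let wait = backoff_delay(attempt, pause, Duration::from_secs(30));
        println!("retry {attempt}: wait {wait:?}");
    }
}
```

Backing off after errors such as HTTP 429 (Too Many Requests) gives the server room to recover and makes a ban less likely.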

Is web scraping legal?

In the United States, web scraping is generally considered to be legal as long as the scraped data is publicly available and the scraping activity does not harm the website being scraped. However, any actions that involve scraping copyrighted content or personal information without consent, or activities that disrupt a website’s normal functioning, may be deemed illegal.

How can a user incorporate a proxy into their Rust web scraping program using Reqwest?

To use a proxy with Reqwest, obtain the proxy URL, build a reqwest::Proxy from it (Proxy::http and Proxy::https cover one scheme each, Proxy::all covers both), and pass it to the client builder’s proxy method so that requests sent through that client go via the proxy.

How can I integrate the Rust compiler with Visual Studio Code?

To integrate Rust with Visual Studio Code, install the rust-analyzer extension from the Extensions view.